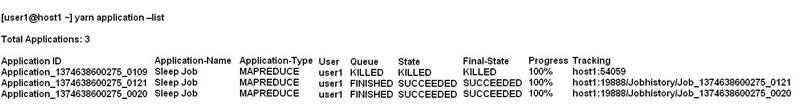

Given:

You want to clean up this list by removing jobs where the State is KILLED. What command do you enter?

Correct Answer: B

Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.1-latest/bk_using-apache-hadoop/content/common_mrv2_commands.html

Which command does Hadoop offer to discover missing or corrupt HDFS data?

Correct Answer: B

Reference: https://twiki.grid.iu.edu/bin/view/Storage/HadoopRecovery
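For context, HDFS ships an `fsck` tool that reports missing or corrupt blocks. The following is a minimal sketch of how such a check might be scripted, assuming the `hdfs` CLI is on the PATH; the helper names `fsck_command` and `run_fsck` are hypothetical and exist only for this illustration.

```python
import subprocess


def fsck_command(path="/", list_corrupt=True):
    """Build the argument list for an HDFS file system check on `path`."""
    cmd = ["hdfs", "fsck", path]
    if list_corrupt:
        # Ask fsck to print only the files that have corrupt blocks.
        cmd.append("-list-corruptfileblocks")
    return cmd


def run_fsck(path="/"):
    """Run the check and return its textual report.

    Assumption: this is executed on a host where the `hdfs` client is
    installed and configured to reach the cluster.
    """
    result = subprocess.run(
        fsck_command(path), capture_output=True, text=True, check=False
    )
    return result.stdout
```

Building the argument list separately from running it keeps the command easy to inspect or log before it touches the cluster.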

A user comes to you, complaining that when she attempts to submit a Hadoop job, it fails. There is a directory in HDFS named /data/input. The JAR is named j.jar, and the driver class is named DriverClass.

She runs the command:

hadoop jar j.jar DriverClass /data/input /data/output

The error message returned includes the line:

PriviligedActionException as:training (auth:SIMPLE) cause:org.apache.hadoop.mapreduce.lib.input.InvalidInputException: Input path does not exist: file:/data/input

What is the cause of the error?

Correct Answer: A
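One detail worth noticing in the error is the `file:` scheme: the scheme-less path `/data/input` was resolved against the local filesystem rather than HDFS. The sketch below is a simplified model of that resolution, not Hadoop's actual code. The function `qualify` and the host name `namenode:8020` are hypothetical; the `file:///` value mirrors Hadoop's out-of-the-box default for `fs.defaultFS`.

```python
from urllib.parse import urlsplit


def qualify(path, default_fs):
    """Attach the default filesystem to a path that carries no scheme.

    Simplified model: a path that already names a scheme (hdfs://, file:)
    is returned unchanged; otherwise it is resolved against `default_fs`.
    """
    if urlsplit(path).scheme:
        return path
    return default_fs.rstrip("/") + path


# Client whose configuration still uses the local-filesystem default.
print(qualify("/data/input", "file:///"))

# Client whose configuration points at a (hypothetical) NameNode.
print(qualify("/data/input", "hdfs://namenode:8020"))
```

Under this model the first call yields a `file:` path like the one in the error message, while the second yields an `hdfs://` path.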

You have a cluster running with a FIFO scheduler enabled. You submit a large job A to the cluster, which you expect to run for one hour. Then, you submit job B to the cluster, which you expect to run for only a couple of minutes.

You submit both jobs with the same priority.

Which two statements best describe how the FIFO Scheduler arbitrates the cluster resources for a job and its tasks? (Choose two)

Correct Answer: AD
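To make the first-in, first-out behavior concrete, here is a toy simulation, assuming equal priorities, a fixed number of task slots, and tasks that each take exactly one time step. These are simplifications for illustration; `fifo_schedule` is a hypothetical name, and this is not the scheduler's real implementation.

```python
from collections import deque


def fifo_schedule(jobs, slots):
    """Simulate FIFO slot assignment.

    jobs:  list of (name, task_count) in submission order.
    slots: number of tasks the cluster can run per time step.
    Returns one list per time step naming the job behind each running task.
    """
    pending = deque([name, count] for name, count in jobs)
    timeline = []
    while pending:
        step, free = [], slots
        while free and pending:
            job = pending[0]              # the oldest job always goes first
            take = min(free, job[1])
            step.extend([job[0]] * take)
            job[1] -= take
            free -= take
            if job[1] == 0:               # job has no tasks left to place
                pending.popleft()
        timeline.append(step)
    return timeline


for step in fifo_schedule([("A", 5), ("B", 2)], slots=2):
    print(step)
```

In this model, job B receives slots only once job A no longer has tasks waiting to fill them, which is why a short job can sit behind a long one.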

You want to understand more about how users browse your public website. For example, you want to know which pages they visit prior to placing an order. You have a server farm of 200 web servers hosting your website. Which is the most efficient process to gather these web server access logs into your Hadoop cluster for analysis?

Correct Answer: B

Apache Flume is a service for streaming logs into Hadoop.

Apache Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of streaming data into the Hadoop Distributed File System (HDFS). It has a simple and flexible architecture based on streaming data flows, and it is robust and fault tolerant, with tunable reliability mechanisms for failover and recovery.
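The streaming data flow described above can be pictured as a source that produces events, a channel that buffers them, and a sink that drains them in batches. The following is a rough in-memory sketch of that shape, not Flume's API; the function names and the batching rule are assumptions made for illustration.

```python
from queue import Queue


def source(lines, channel):
    """Push each log line into the channel as one event."""
    for line in lines:
        channel.put(line)


def sink(channel, batch_size):
    """Drain the channel into batches, as a sink might before writing to HDFS."""
    batches, batch = [], []
    while not channel.empty():
        batch.append(channel.get())
        if len(batch) == batch_size:
            batches.append(batch)
            batch = []
    if batch:                      # flush the final partial batch
        batches.append(batch)
    return batches


channel = Queue()
source(["GET /home", "GET /cart", "POST /order"], channel)
print(sink(channel, batch_size=2))
```

The channel decouples the rate at which web servers emit log lines from the rate at which the sink writes them out, which is the part of the design that makes buffering and recovery possible.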